Welcome! You are about to start on a journey to learn how to create web applications with Python and the Flask framework. The video above will give you an overview of the contents of this tutorial. In this first chapter, you are going to learn how to set up a Flask project. By the end of this chapter you are going to have a simple Flask web application running on your computer!

For your reference, below is a list of the articles in this series.

- Chapter 1: Hello, World! (this article)

- Chapter 2: Templates

- Chapter 3: Web Forms

- Chapter 4: Database

- Chapter 5: User Logins

- Chapter 6: Profile Page and Avatars

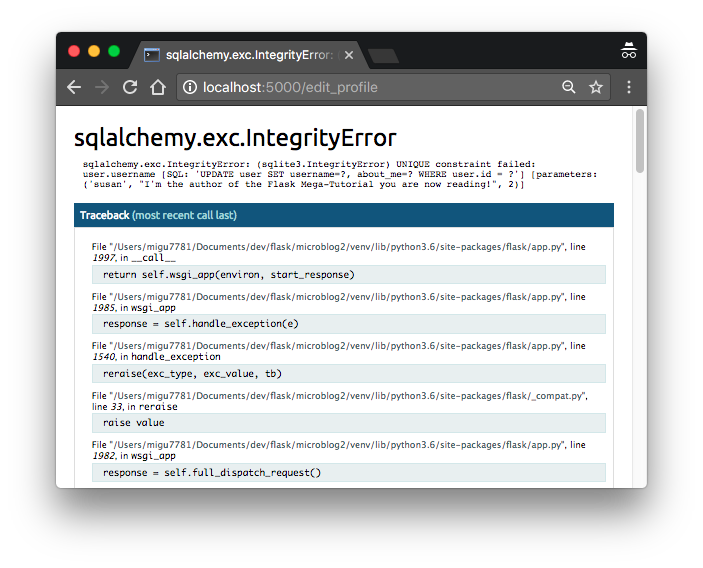

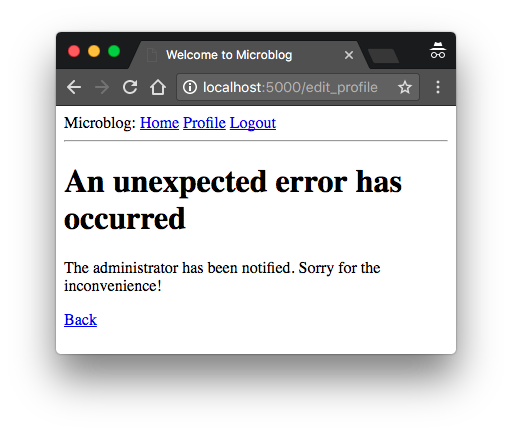

- Chapter 7: Error Handling

- Chapter 8: Followers

- Chapter 9: Pagination

- Chapter 10: Email Support

- Chapter 11: Facelift

- Chapter 12: Dates and Times

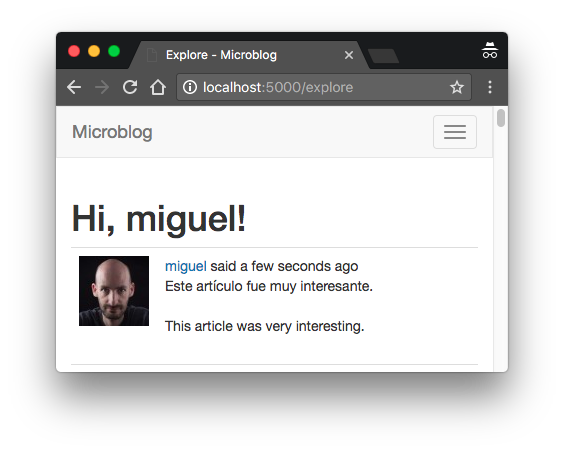

- Chapter 13: I18n and L10n

- Chapter 14: Ajax

- Chapter 15: A Better Application Structure

- Chapter 16: Full-Text Search

- Chapter 17: Deployment on Linux

- Chapter 18: Deployment on Heroku

- Chapter 19: Deployment on Docker Containers

- Chapter 20: Some JavaScript Magic

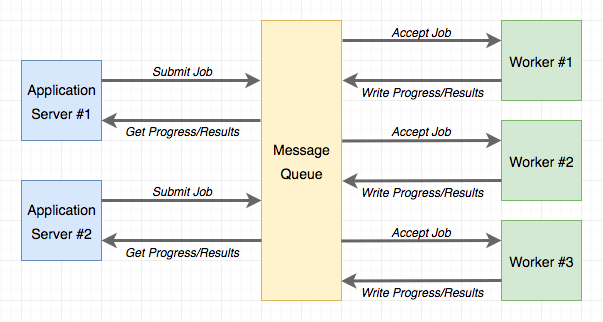

- Chapter 21: User Notifications

- Chapter 22: Background Jobs

- Chapter 23: Application Programming Interfaces (APIs)

Note 1: If you are looking for the legacy version of this tutorial, it’s here.

Note 2: If you would like to support my work on this blog, or just don’t have patience to wait for weekly articles, I am offering the complete version of this tutorial packaged as an ebook or a set of videos. For more information, visit courses.miguelgrinberg.com.

All the code examples presented in this tutorial are hosted on a GitHub repository. Downloading the code from GitHub can save you a lot of typing, but I strongly recommend that you type the code yourself, at least for the first few chapters. Once you become more familiar with Flask and the example application you can access the code directly from GitHub if the typing becomes too tedious.

At the beginning of each chapter, I’m going to give you three GitHub links that can be useful while you work through the chapter. The Browse link will open the GitHub repository for Microblog at the place where the changes for the chapter you are reading were added, without including any changes introduced in future chapters. The Zip link is a download link for a zip file including the entire application up to and including the changes in the chapter. The Diff link will open a graphical view of all the changes that were made in the chapter you are about to read.

The GitHub links for this chapter are: Browse, Zip, Diff.

Installing Python

If you don’t have Python installed on your computer, go ahead and install it now. If your operating system does not provide you with a Python package, you can download an installer from the Python official website. If you are using Microsoft Windows along with WSL or Cygwin, note that you will not be using the Windows native version of Python, but a Unix-friendly version that you need to obtain from Ubuntu (if you are using WSL) or from Cygwin.

To make sure your Python installation is functional, you can open a terminal window and type python3, or if that does not work, just python. Here is what you should expect to see:

$ python3

Python 3.5.2 (default, Nov 17 2016, 17:05:23)

[GCC 5.4.0 20160609] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> _

The Python interpreter is now waiting at an interactive prompt, where you can enter Python statements. In future chapters you will learn what kinds of things this interactive prompt is useful for. But for now, you have confirmed that Python is installed on your system. To exit the interactive prompt, you can type exit() and press Enter. On the Linux and Mac OS X versions of Python you can also exit the interpreter by pressing Ctrl-D. On Windows, the exit shortcut is Ctrl-Z followed by Enter.

Installing Flask

The next step is to install Flask, but before I go into that I want to tell you about the best practices associated with installing Python packages.

In Python, packages such as Flask are available in a public repository, from where anybody can download them and install them. The official Python package repository is called PyPI, which stands for Python Package Index (some people also refer to this repository as the “cheese shop”). Installing a package from PyPI is very simple, because Python comes with a tool called pip that does this work (in Python 2.7 pip does not come bundled with Python and needs to be installed separately).

To install a package on your machine, you use pip as follows:

$ pip install <package-name>

Interestingly, this method of installing packages will not work in most cases. If your Python interpreter was installed globally for all the users of your computer, chances are your regular user account is not going to have permission to make modifications to it, so the only way to make the command above work is to run it from an administrator account. But even without that complication, consider what happens when you install a package as above. The pip tool is going to download the package from PyPI, and then add it to your Python installation. From that point on, every Python script that you have on your system will have access to this package. Imagine a situation where you have completed a web application using version 0.11 of Flask, which was the most current version of Flask when you started, but has now been superseded by version 0.12. You now want to start a second application, for which you’d like to use the 0.12 version, but if you replace the 0.11 version that you have installed you risk breaking your older application. Do you see the problem? It would be ideal if it were possible to install Flask 0.11 to be used by your old application, and also install Flask 0.12 for your new one.

To address the issue of maintaining different versions of packages for different applications, Python uses the concept of virtual environments. A virtual environment is a complete copy of the Python interpreter. When you install packages in a virtual environment, the system-wide Python interpreter is not affected, only the copy is. So the solution to have complete freedom to install any versions of your packages for each application is to use a different virtual environment for each application. Virtual environments have the added benefit that they are owned by the user who creates them, so they do not require an administrator account.

Let’s start by creating a directory where the project will live. I’m going to call this directory microblog, since that is the name of the application:

$ mkdir microblog

$ cd microblog

If you are using a Python 3 version, virtual environment support is included in it, so all you need to do to create one is this:

$ python3 -m venv venv

With this command, I’m asking Python to run the venv package, which creates a virtual environment named venv. The first venv in the command is the name of the Python virtual environment package, and the second is the virtual environment name that I’m going to use for this particular environment. If you find this confusing, you can replace the second venv with a different name that you want to assign to your virtual environment. In general I create my virtual environments with the name venv in the project directory, so whenever I cd into a project I find its corresponding virtual environment.

Note that in some operating systems you may need to use python instead of python3 in the command above. Some installations use python for Python 2.x releases and python3 for the 3.x releases, while others map python to the 3.x releases.

After the command completes, you are going to have a directory named venv where the virtual environment files are stored.
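If you are curious about what that directory contains, the same venv package can also be called from Python code. The following is only a sketch: the create_environment() helper is a name I made up, it works in a temporary directory so it does not touch your project, and it skips installing pip (with_pip=False) to stay quick, which the command-line form does not do.

```python
import tempfile
import venv
from pathlib import Path


def create_environment(project_dir):
    """Create a virtual environment named 'venv' inside project_dir."""
    env_dir = Path(project_dir) / "venv"
    # with_pip=False keeps this sketch fast; "python3 -m venv venv" installs pip too.
    venv.create(env_dir, with_pip=False)
    return env_dir


with tempfile.TemporaryDirectory() as project_dir:
    env_dir = create_environment(project_dir)
    # Collect the top-level entries before the temporary directory is deleted.
    created = sorted(p.name for p in env_dir.iterdir())
    has_config = (env_dir / "pyvenv.cfg").exists()

print(created)
print(has_config)
```

The exact list of entries depends on your operating system, but a pyvenv.cfg file should be among them.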

If you are using any version of Python older than 3.4 (and that includes the 2.7 release), virtual environments are not supported natively. For those versions of Python, you need to download and install a third-party tool called virtualenv before you can create virtual environments. Once virtualenv is installed, you can create a virtual environment with the following command:

$ virtualenv venv

Regardless of the method you used to create it, you should have your virtual environment created. Now you have to tell the system that you want to use it, and you do that by activating it. To activate your brand new virtual environment you use the following command:

$ source venv/bin/activate

(venv) $ _

If you are using a Microsoft Windows command prompt window, the activation command is slightly different:

$ venv\Scripts\activate

(venv) $ _

When you activate a virtual environment, the configuration of your terminal session is modified so that the Python interpreter stored inside it is the one that is invoked when you type python. Also, the terminal prompt is modified to include the name of the activated virtual environment. The changes made to your terminal session are all temporary and private to that session, so they will not persist when you close the terminal window. If you work with multiple terminal windows open at the same time, it is perfectly fine to have different virtual environments activated on each one.
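A quick way to check from Python whether the interpreter you are running belongs to a virtual environment is to compare sys.prefix with sys.base_prefix. This is a small sketch that assumes the environment was created with venv on Python 3; the function name is my own.

```python
import sys


def in_virtual_environment():
    """Return True when the running interpreter belongs to a venv."""
    # Inside a venv, sys.prefix points at the environment directory, while
    # sys.base_prefix still points at the Python installation it was created from.
    return sys.prefix != sys.base_prefix


print(in_virtual_environment())
```

Running this with the virtual environment activated and again in a fresh terminal should show the difference.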

Now that you have a virtual environment created and activated, you can finally install Flask in it:

(venv) $ pip install flask

If you want to confirm that your virtual environment now has Flask installed, you can start the Python interpreter and import Flask into it:

>>> import flask

>>> _

If this statement does not give you any errors you can congratulate yourself, as Flask is installed and ready to be used.
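If you also want to know which version was installed, the standard library can report it. The sketch below assumes Python 3.8 or newer, where importlib.metadata is available; the installed_version() helper is a hypothetical name of mine, and it returns None instead of failing when a package is missing.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package_name):
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None


print(installed_version("flask"))
```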

A “Hello, World” Flask Application

If you go to the Flask website, you are welcomed with a very simple example application that has just five lines of code. Instead of repeating that trivial example, I’m going to show you a slightly more elaborate one that will give you a good base structure for writing larger applications.

The application will exist in a package. In Python, a sub-directory that includes an __init__.py file is considered a package, and can be imported. When you import a package, the __init__.py executes and defines what symbols the package exposes to the outside world.
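To see this mechanism in isolation, here is a small experiment that builds a throwaway package in a temporary directory and imports it. The demo_pkg name and the greeting variable are made up for the illustration and have nothing to do with the microblog project.

```python
import importlib
import sys
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as root:
    # A directory plus an __init__.py file is all it takes to make a package.
    pkg_dir = Path(root) / "demo_pkg"
    pkg_dir.mkdir()
    (pkg_dir / "__init__.py").write_text("greeting = 'hello from demo_pkg'\n")

    # Make the temporary directory importable for the duration of the test.
    sys.path.insert(0, root)
    importlib.invalidate_caches()
    try:
        demo_pkg = importlib.import_module("demo_pkg")
        # Names assigned in __init__.py become attributes of the package.
        message = demo_pkg.greeting
    finally:
        sys.path.remove(root)

print(message)
```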

Let’s create a package called app, that will host the application. Make sure you are in the microblog directory and then run the following command:

(venv) $ mkdir app

The __init__.py for the app package is going to contain the following code:

app/__init__.py: Flask application instance

from flask import Flask

app = Flask(__name__)

from app import routes

The script above simply creates the application object as an instance of class Flask imported from the flask package. The __name__ variable passed to the Flask class is a Python predefined variable, which is set to the name of the module in which it is used. Flask uses the location of the module passed here as a starting point when it needs to load associated resources such as template files, which I will cover in Chapter 2. For all practical purposes, passing __name__ is almost always going to configure Flask in the correct way. The application then imports the routes module, which doesn’t exist yet.

One aspect that may seem confusing at first is that there are two entities named app. The app package is defined by the app directory and the __init__.py script, and is referenced in the from app import routes statement. The app variable is defined as an instance of class Flask in the __init__.py script, which makes it a member of the app package.

Another peculiarity is that the routes module is imported at the bottom and not at the top of the script as is usually done. The bottom import is a workaround for circular imports, a common problem with Flask applications. You are going to see that the routes module needs to import the app variable defined in this script, so putting one of the reciprocal imports at the bottom avoids the error that results from the mutual references between these two files.
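If you want to convince yourself that the position of the import matters, the experiment below writes two tiny packages into a temporary directory, one with the import at the bottom and one with it at the top, and tries to import both. The package names demo_bottom and demo_top and the helper functions are hypothetical, and a plain string stands in for the Flask application object.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def write_package(root, name, init_source, routes_source):
    """Write a package made of __init__.py and routes.py (demo helper)."""
    pkg = Path(root) / name
    pkg.mkdir()
    (pkg / "__init__.py").write_text(init_source)
    (pkg / "routes.py").write_text(routes_source)


def try_import(name):
    """Return 'ok' if the package imports, or 'ImportError' if it does not."""
    try:
        importlib.import_module(name)
        return "ok"
    except ImportError:
        return "ImportError"


with tempfile.TemporaryDirectory() as root:
    # Import at the bottom: 'app' already exists when routes.py asks for it.
    write_package(root, "demo_bottom",
                  "app = 'application object'\nfrom demo_bottom import routes\n",
                  "from demo_bottom import app\n")
    # Import at the top: routes.py runs before 'app' has been assigned.
    write_package(root, "demo_top",
                  "from demo_top import routes\napp = 'application object'\n",
                  "from demo_top import app\n")
    sys.path.insert(0, root)
    importlib.invalidate_caches()
    try:
        bottom_result = try_import("demo_bottom")
        top_result = try_import("demo_top")
    finally:
        sys.path.remove(root)

print(bottom_result)
print(top_result)
```

The first package imports cleanly, while the second one fails because routes.py asks for a name that its parent package has not defined yet.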

So what goes in the routes module? The routes are the different URLs that the application implements. In Flask, handlers for the application routes are written as Python functions, called view functions. View functions are mapped to one or more route URLs so that Flask knows what logic to execute when a client requests a given URL.

Here is your first view function, which you need to write in the new module named app/routes.py:

app/routes.py: Home page route

from app import app

@app.route('/')

@app.route('/index')

def index():

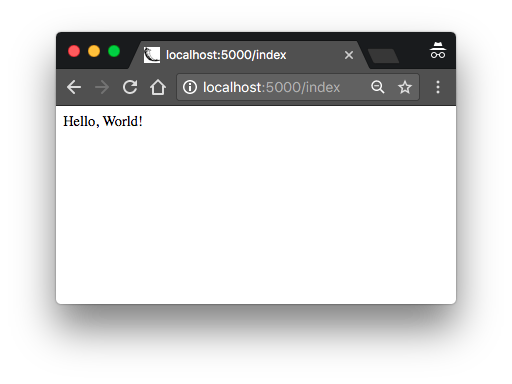

return "Hello, World!"

This view function is actually pretty simple: it just returns a greeting as a string. The two strange @app.route lines above the function are decorators, a unique feature of the Python language. A decorator modifies the function that follows it. A common pattern with decorators is to use them to register functions as callbacks for certain events. In this case, the @app.route decorator creates an association between the URL given as an argument and the function. In this example there are two decorators, which associate the URLs / and /index to this function. This means that when a web browser requests either of these two URLs, Flask is going to invoke this function and pass its return value back to the browser as a response. If this does not make complete sense yet, it will in a little bit when you run this application.
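If decorators are new to you, it may help to see the registration pattern without Flask in the picture. The following is a simplified sketch of the idea and not Flask's actual implementation; the url_map dictionary and the route() and dispatch() functions are names I invented for it.

```python
url_map = {}  # hypothetical registry that maps a URL to its view function


def route(url):
    """Return a decorator that registers the decorated function under url."""
    def decorator(view_function):
        url_map[url] = view_function
        return view_function  # the function itself is handed back unchanged
    return decorator


@route('/')
@route('/index')
def index():
    return "Hello, World!"


def dispatch(url):
    """Find the view function registered for url and call it."""
    return url_map[url]()


print(dispatch('/'))
print(dispatch('/index'))
```

Both URLs end up pointing at the same function, which is the effect that stacking two decorators has in the real application.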

To complete the application, you need to have a Python script at the top-level that defines the Flask application instance. Let’s call this script microblog.py, and define it as a single line that imports the application instance:

microblog.py: Main application module

from app import app

Remember the two app entities? Here you can see both together in the same sentence. The Flask application instance is called app and is a member of the app package. The from app import app statement imports the app variable that is a member of the app package. If you find this confusing, you can rename either the package or the variable to something else.

Just to make sure that you are doing everything correctly, below you can see a diagram of the project structure so far:

microblog/
  venv/
  app/
    __init__.py
    routes.py
  microblog.py

Believe it or not, this first version of the application is now complete! Before running it, though, Flask needs to be told how to import it, by setting the FLASK_APP environment variable:

(venv) $ export FLASK_APP=microblog.py

If you are using Microsoft Windows, use set instead of export in the command above.

Are you ready to be blown away? You can run your first web application, with the following command:

(venv) $ flask run

* Serving Flask app "microblog"

* Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)

After the server initializes it will wait for client connections. The output from flask run indicates that the server is running on IP address 127.0.0.1, which is always the address of your own computer. This address is so common that it also has a simpler name that you may have seen before: localhost. Network servers listen for connections on a specific port number. Applications deployed on production web servers typically listen on port 443, or sometimes 80 if they do not implement encryption, but access to these ports requires administration rights. Since this application is running in a development environment, Flask uses the freely available port 5000. Now open up your web browser and enter the following URL in the address field:

http://localhost:5000/

Alternatively you can use this other URL:

http://localhost:5000/index

Do you see the application route mappings in action? The first URL maps to /, while the second maps to /index. Both routes are associated with the only view function in the application, so they produce the same output, which is the string that the function returns. If you enter any other URL you will get an error, since only these two URLs are recognized by the application.
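The same check can be made without a browser by using the test client that comes with Flask, which sends requests to the application in-process. The sketch below defines a stand-alone copy of the application instead of importing the microblog package so that it can run on its own, and /missing is just a made-up URL that no route handles.

```python
from flask import Flask

app = Flask(__name__)


@app.route('/')
@app.route('/index')
def index():
    return "Hello, World!"


# The test client issues requests without starting a real server.
client = app.test_client()
statuses = {}
for url in ('/', '/index', '/missing'):
    response = client.get(url)
    statuses[url] = response.status_code

home_body = client.get('/').get_data(as_text=True)
print(statuses)
print(home_body)
```

The two registered URLs answer with status code 200, while the unknown one gets a 404 error.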

When you are done playing with the server you can just press Ctrl-C to stop it.

Congratulations, you have completed the first big step to become a web developer!

Before I end this chapter, I want to mention one more thing. Since environment variables aren’t remembered across terminal sessions, you may find it tedious to always have to set the FLASK_APP environment variable when you open a new terminal window. Starting with version 1.0, Flask allows you to register environment variables that you want to be automatically imported when you run the flask command. To use this option you have to install the python-dotenv package:

(venv) $ pip install python-dotenv

Then you can just write the environment variable name and value in a .flaskenv file in the top-level directory of the project:

.flaskenv: Environment variables for flask command

FLASK_APP=microblog.py

Doing this is optional. If you prefer to set the environment variable manually, that is perfectly fine, as long as you always remember to do it.
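In case you are wondering what loading such a file amounts to, here is a deliberately simplified sketch. It is not the python-dotenv parser, which handles quoting and other details; the parse_env_lines() and apply_defaults() helpers are hypothetical, and I am assuming that values already present in the environment should be left alone.

```python
def parse_env_lines(text):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith('#') or '=' not in line:
            continue
        key, _, value = line.partition('=')
        values[key.strip()] = value.strip()
    return values


def apply_defaults(values, environ):
    """Copy parsed values into environ without replacing existing entries."""
    for key, value in values.items():
        environ.setdefault(key, value)


flaskenv_text = "# settings for the flask command\nFLASK_APP=microblog.py\n"

environ = {}  # a plain dictionary standing in for os.environ
apply_defaults(parse_env_lines(flaskenv_text), environ)
print(environ)
```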

The Flask Mega-Tutorial Part II: Templates

After you complete Chapter 1, you should have a fully working, yet simple web application that has the following file structure:

microblog/
  venv/
  app/
    __init__.py
    routes.py
  microblog.py

To run the application you set the FLASK_APP=microblog.py in your terminal session, and then execute flask run. This starts a web server with the application, which you can open by typing the http://localhost:5000/ URL in your web browser’s address bar.

In this chapter you will continue working on the same application, and in particular, you are going to learn how to generate more elaborate web pages that have a complex structure and many dynamic components. If anything about the application or the development workflow so far isn’t clear, please review Chapter 1 again before continuing.

The GitHub links for this chapter are: Browse, Zip, Diff.

What Are Templates?

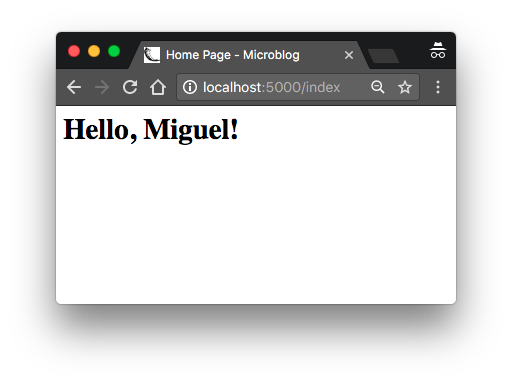

I want the home page of my microblogging application to have a heading that welcomes the user. For the moment, I’m going to ignore the fact that the application does not have the concept of users yet, as this is going to come later. Instead, I’m going to use a mock user, which I’m going to implement as a Python dictionary, as follows:

user = {'username': 'Miguel'}

Creating mock objects is a useful technique that allows you to concentrate on one part of the application without having to worry about other parts of the system that don’t exist yet. I want to design the home page of my application, and I don’t want the fact that I don’t have a user system in place to distract me, so I just make up a user object so that I can keep going.

The view function in the application returns a simple string. What I want to do now is expand that returned string into a complete HTML page, maybe something like this:

app/routes.py: Return complete HTML page from view function

from app import app

@app.route('/')
@app.route('/index')
def index():
    user = {'username': 'Miguel'}
    return '''
<html>
    <head>
        <title>Home Page - Microblog</title>
    </head>
    <body>
        <h1>Hello, ''' + user['username'] + '''!</h1>
    </body>
</html>'''

If you are not familiar with HTML, I recommend that you read HTML Markup on Wikipedia for a brief introduction.

Update the view function as shown above and give the application a try to see how it looks in your browser.

I hope you agree with me that the solution used above to deliver HTML to the browser is not good. Consider how complex the code in this view function will become when I have the blog posts from users, which are going to constantly change. The application is also going to have more view functions that are going to be associated with other URLs, so imagine if one day I decide to change the layout of this application, and have to update the HTML in every view function. This is clearly not an option that will scale as the application grows.

If you could keep the logic of your application separate from the layout or presentation of your web pages, then things would be much better organized, don’t you think? You could even hire a web designer to create a killer web site while you code the application logic in Python.

Templates help achieve this separation between presentation and business logic. In Flask, templates are written as separate files, stored in a templates folder that is inside the application package. So after making sure that you are in the microblog directory, create the directory where templates will be stored:

(venv) $ mkdir app/templates

Below you can see your first template, which is similar in functionality to the HTML page returned by the index() view function above. Write this file in app/templates/index.html:

app/templates/index.html: Main page template

<html>
    <head>
        <title>{{ title }} - Microblog</title>
    </head>
    <body>
        <h1>Hello, {{ user.username }}!</h1>
    </body>
</html>

This is a mostly standard, very simple HTML page. The only interesting thing in this page is that there are a couple of placeholders for the dynamic content, enclosed in {{ ... }} sections. These placeholders represent the parts of the page that are variable and will only be known at runtime.

Now that the presentation of the page was offloaded to the HTML template, the view function can be simplified:

app/routes.py: Use render_template() function

from flask import render_template
from app import app

@app.route('/')
@app.route('/index')
def index():
    user = {'username': 'Miguel'}
    return render_template('index.html', title='Home', user=user)

This looks much better, right? Try this new version of the application to see how the template works. Once you have the page loaded in your browser, you may want to view the source HTML and compare it against the original template.

The operation that converts a template into a complete HTML page is called rendering. To render the template I had to import a function that comes with the Flask framework called render_template(). This function takes a template filename and a variable list of template arguments and returns the same template, but with all the placeholders in it replaced with actual values.

The render_template() function invokes the Jinja2 template engine that comes bundled with the Flask framework. Jinja2 substitutes {{ ... }} blocks with the corresponding values, given by the arguments provided in the render_template() call.
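The substitution step can be tried on its own with the jinja2 package that gets installed along with Flask. This is only an illustration: it uses jinja2.Template directly on a short string, whereas Flask loads template files and configures Jinja2 with its own settings.

```python
from jinja2 import Template

# A shortened version of the page with the same two placeholders.
template = Template(
    "<title>{{ title }} - Microblog</title>\n"
    "<h1>Hello, {{ user.username }}!</h1>"
)

# Keyword arguments to render() supply the values for the placeholders.
page = template.render(title='Home', user={'username': 'Miguel'})
print(page)
```

Note that {{ user.username }} works even though user is a dictionary, because Jinja2 falls back to a key lookup when there is no attribute with that name.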

Conditional Statements

You have seen how Jinja2 replaces placeholders with actual values during rendering, but this is just one of many powerful operations Jinja2 supports in template files. For example, templates also support control statements, given inside {% ... %} blocks. The next version of the index.html template adds a conditional statement:

app/templates/index.html: Conditional statement in template

<html>
    <head>
        {% if title %}
        <title>{{ title }} - Microblog</title>
        {% else %}
        <title>Welcome to Microblog!</title>
        {% endif %}
    </head>
    <body>
        <h1>Hello, {{ user.username }}!</h1>
    </body>
</html>

Now the template is a bit smarter. If the view function forgets to pass a value for the title placeholder variable, then instead of showing an empty title the template will provide a default one. You can try how this conditional works by removing the title argument in the render_template() call of the view function.
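The conditional can also be exercised in isolation, using the jinja2 package directly on a one-line template. This is a sketch that keeps only the title logic; the variable names are mine.

```python
from jinja2 import Template

title_template = Template(
    "{% if title %}{{ title }} - Microblog{% else %}Welcome to Microblog!{% endif %}"
)

# When title is passed, the first branch is rendered.
with_title = title_template.render(title='Home')
# When title is left out, it is undefined, which counts as false.
without_title = title_template.render()

print(with_title)
print(without_title)
```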

Loops

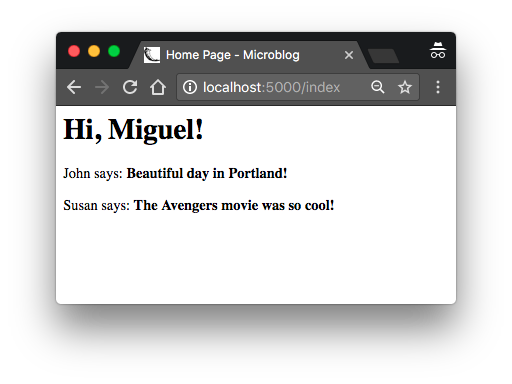

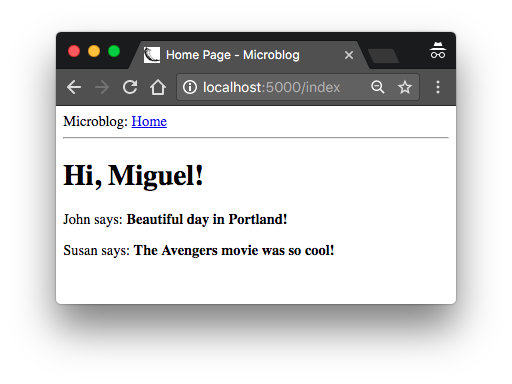

The logged in user will probably want to see recent posts from connected users in the home page, so what I’m going to do now is extend the application to support that.

Once again, I’m going to rely on the handy fake object trick to create some users and some posts to show:

app/routes.py: Fake posts in view function

from flask import render_template
from app import app

@app.route('/')
@app.route('/index')
def index():
    user = {'username': 'Miguel'}
    posts = [
        {
            'author': {'username': 'John'},
            'body': 'Beautiful day in Portland!'
        },
        {
            'author': {'username': 'Susan'},
            'body': 'The Avengers movie was so cool!'
        }
    ]
    return render_template('index.html', title='Home', user=user, posts=posts)

To represent user posts I’m using a list, where each element is a dictionary that has author and body fields. When I get to implement users and blog posts for real I’m going to try to preserve these field names as much as possible, so that all the work I’m doing to design and test the home page template using these fake objects will continue to be valid when I introduce real users and posts.

On the template side I have to solve a new problem. The list of posts can have any number of elements; it is up to the view function to decide how many posts are going to be presented in the page. The template cannot make any assumptions about how many posts there are, so it needs to be prepared to render as many posts as the view sends in a generic way.

For this type of problem, Jinja2 offers a for control structure:

app/templates/index.html: for-loop in template

<html>
    <head>
        {% if title %}
        <title>{{ title }} - Microblog</title>
        {% else %}
        <title>Welcome to Microblog</title>
        {% endif %}
    </head>
    <body>
        <h1>Hi, {{ user.username }}!</h1>
        {% for post in posts %}
        <div><p>{{ post.author.username }} says: <b>{{ post.body }}</b></p></div>
        {% endfor %}
    </body>
</html>

Simple, right? Give this new version of the application a try, and be sure to play with adding more content to the posts list to see how the template adapts and always renders all the posts the view function sends.
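To watch the loop adapt to the size of the list without going through the browser, the jinja2 package can render a stripped-down version of the loop directly. This is a sketch with the HTML tags removed to keep the output easy to read.

```python
from jinja2 import Template

template = Template(
    "{% for post in posts %}"
    "{{ post.author.username }} says: {{ post.body }}\n"
    "{% endfor %}"
)

posts = [
    {'author': {'username': 'John'}, 'body': 'Beautiful day in Portland!'},
    {'author': {'username': 'Susan'}, 'body': 'The Avengers movie was so cool!'},
]

# The loop body is repeated once for every element in the list.
two_posts = template.render(posts=posts)
# With an empty list the loop body is never rendered.
no_posts = template.render(posts=[])

print(two_posts)
```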

Template Inheritance

Most web applications these days have a navigation bar at the top of the page with a few frequently used links, such as a link to edit your profile, to login, logout, etc. I can easily add a navigation bar to the index.html template with some more HTML, but as the application grows I will be needing this same navigation bar in other pages. I don’t really want to have to maintain several copies of the navigation bar in many HTML templates; it is a good practice to not repeat yourself if that is possible.

Jinja2 has a template inheritance feature that specifically addresses this problem. In essence, what you can do is move the parts of the page layout that are common to all templates to a base template, from which all other templates are derived.

So what I’m going to do now is define a base template called base.html that includes a simple navigation bar and also the title logic I implemented earlier. You need to write the following template in file app/templates/base.html:

app/templates/base.html: Base template with navigation bar

<html>
    <head>
        {% if title %}
        <title>{{ title }} - Microblog</title>
        {% else %}
        <title>Welcome to Microblog</title>
        {% endif %}
    </head>
    <body>
        <div>Microblog: <a href="/index">Home</a></div>
        <hr>
        {% block content %}{% endblock %}
    </body>
</html>

In this template I used the block control statement to define the place where the derived templates can insert themselves. Blocks are given a unique name, which derived templates can reference when they provide their content.

With the base template in place, I can now simplify index.html by making it inherit from base.html:

app/templates/index.html: Inherit from base template

{% extends "base.html" %}

{% block content %}
    <h1>Hi, {{ user.username }}!</h1>
    {% for post in posts %}
    <div><p>{{ post.author.username }} says: <b>{{ post.body }}</b></p></div>
    {% endfor %}
{% endblock %}

Since the base.html template will now take care of the general page structure, I have removed all those elements from index.html and left only the content part. The extends statement establishes the inheritance link between the two templates, so that Jinja2 knows that when it is asked to render index.html it needs to embed it inside base.html. The two templates have matching block statements with name content, and this is how Jinja2 knows how to combine the two templates into one. Now if I need to create additional pages for the application, I can create them as derived templates from the same base.html template, and that is how I can have all the pages of the application sharing the same look and feel without duplication.
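The way the two templates are combined can be reproduced outside of Flask with the jinja2 package and an in-memory loader. This is a sketch with shortened templates: DictLoader stands in for the templates folder, which is not how Flask locates its templates.

```python
from jinja2 import DictLoader, Environment

# Two in-memory templates that mirror the base/derived relationship.
templates = {
    'base.html': (
        '<div>Microblog: <a href="/index">Home</a></div>\n'
        '<hr>\n'
        '{% block content %}{% endblock %}'
    ),
    'index.html': (
        '{% extends "base.html" %}\n'
        '{% block content %}<h1>Hi, {{ user.username }}!</h1>{% endblock %}'
    ),
}

env = Environment(loader=DictLoader(templates))

# Rendering the derived template pulls in the layout from the base template.
page = env.get_template('index.html').render(user={'username': 'Miguel'})
print(page)
```

The navigation bar comes from base.html and the heading comes from index.html, even though only index.html was asked for.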

The Flask Mega-Tutorial Part III: Web Forms

In Chapter 2 I created a simple template for the home page of the application, and used fake objects as placeholders for things I don’t have yet, like users or blog posts. In this chapter I’m going to address one of the many holes I still have in this application, specifically how to accept input from users through web forms.

Web forms are one of the most basic building blocks in any web application. I will be using forms to allow users to submit blog posts, and also for logging in to the application.

Before you proceed with this chapter, make sure you have the microblog application as I left it in the previous chapter installed, and that you can run it without any errors.

The GitHub links for this chapter are: Browse, Zip, Diff.

Introduction to Flask-WTF

To handle the web forms in this application I’m going to use the Flask-WTF extension, which is a thin wrapper around the WTForms package that nicely integrates it with Flask. This is the first Flask extension that I’m presenting to you, but it is not going to be the last. Extensions are a very important part of the Flask ecosystem, as they provide solutions to problems that Flask is intentionally not opinionated about.

Flask extensions are regular Python packages that are installed with pip. You can go ahead and install Flask-WTF in your virtual environment:

(venv) $ pip install flask-wtf

Configuration

So far the application is very simple, and for that reason I did not need to worry about its configuration. But for any applications except the simplest ones, you are going to find that Flask (and possibly also the Flask extensions that you use) offers some amount of freedom in how to do things, and you need to make some decisions, which you pass to the framework as a list of configuration variables.

There are several formats for the application to specify configuration options. The most basic solution is to define your variables as keys in app.config, which uses a dictionary style to work with variables. For example, you could do something like this:

app = Flask(__name__)

app.config['SECRET_KEY'] = 'you-will-never-guess'

# ... add more variables here as needed

While the above syntax is sufficient to create configuration options for Flask, I like to enforce the principle of separation of concerns, so instead of putting my configuration in the same place where I create my application I will use a slightly more elaborate structure that allows me to keep my configuration in a separate file.

A format that I really like because it is very extensible, is to use a class to store configuration variables. To keep things nicely organized, I’m going to create the configuration class in a separate Python module. Below you can see the new configuration class for this application, stored in a config.py module in the top-level directory.

config.py: Secret key configuration

import os

class Config(object):
    SECRET_KEY = os.environ.get('SECRET_KEY') or 'you-will-never-guess'

Pretty simple, right? The configuration settings are defined as class variables inside the Config class. As the application needs more configuration items, they can be added to this class, and later if I find that I need to have more than one configuration set, I can create subclasses of it. But don’t worry about this just yet.

The SECRET_KEY configuration variable that I added as the only configuration item is an important part in most Flask applications. Flask and some of its extensions use the value of the secret key as a cryptographic key, useful to generate signatures or tokens. The Flask-WTF extension uses it to protect web forms against a nasty attack called Cross-Site Request Forgery or CSRF (pronounced “seasurf”). As its name implies, the secret key is supposed to be secret, as the strength of the tokens and signatures generated with it depends on no person outside of the trusted maintainers of the application knowing it.
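To make the idea of a signature concrete, here is a minimal sketch that uses only the Python standard library. It is not the code that Flask or Flask-WTF run internally; the sign() and verify() helpers are hypothetical names chosen for this illustration. It only shows why a value signed with the secret key cannot be forged or altered by someone who does not know the key.

```python
import hashlib
import hmac

# Illustrative only: Flask and its extensions use their own signing code.
SECRET_KEY = b'you-will-never-guess'


def sign(message, key=SECRET_KEY):
    """Return a hex signature that only holders of the key can produce."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message, signature, key=SECRET_KEY):
    """Check a signature using a constant-time comparison."""
    return hmac.compare_digest(sign(message, key), signature)


token = sign(b'user=susan')

genuine = verify(b'user=susan', token)       # same message, same key
tampered = verify(b'user=admin', token)      # message was altered
wrong_key = verify(b'user=susan', token, key=b'some-other-key')

print(genuine, tampered, wrong_key)
```

Only the first check succeeds. If the key leaks, anybody can produce valid signatures, which is why it has to stay secret.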

The value of the secret key is set as an expression with two terms, joined by the or operator. The first term looks for the value of an environment variable, also called SECRET_KEY. The second term is just a hardcoded string. This is a pattern that you will see me repeat often for configuration variables. The idea is that a value sourced from an environment variable is preferred, but if the environment does not define the variable, then the hardcoded string is used instead. When you are developing this application, the security requirements are low, so you can just ignore this setting and let the hardcoded string be used. But when this application is deployed on a production server, I will be setting a unique and difficult to guess value in the environment, so that the server has a secure key that nobody else knows.
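The fallback behavior is easy to check in isolation. The sketch below applies the same expression to plain dictionaries instead of os.environ, so that both cases can be shown without touching the real environment. The secret_key_from() helper and the sample values are made up for this illustration. The last line shows one possible way to produce a hard to guess value, using the standard library's secrets module.

```python
import secrets


def secret_key_from(environ):
    # Same expression as in config.py, applied to any dict-like object.
    return environ.get('SECRET_KEY') or 'you-will-never-guess'


# Variable not defined: the hardcoded string is used.
dev_key = secret_key_from({})

# Variable defined: the value from the environment wins.
prod_key = secret_key_from({'SECRET_KEY': 'a-long-random-value'})

# Variable defined but empty: "or" still falls back to the hardcoded string.
empty_key = secret_key_from({'SECRET_KEY': ''})

# One way to generate a random key for production (32 hex characters).
generated = secrets.token_hex(16)

print(dev_key, prod_key, empty_key)
```

Note that because the two terms are joined with or, an environment variable that is set to an empty string is treated the same as one that is not set at all.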

Now that I have a config file, I need to tell Flask to read it and apply it. That can be done right after the Flask application instance is created using the app.config.from_object() method:

app/__init__.py: Flask configuration

from flask import Flask

from config import Config

app = Flask(__name__)

app.config.from_object(Config)

from app import routes

The way I’m importing the Config class may seem confusing at first, but if you look at how the Flask class (uppercase “F”) is imported from the flask package (lowercase “f”) you’ll notice that I’m doing the same with the configuration. The lowercase “config” is the name of the Python module config.py, and obviously the one with the uppercase “C” is the actual class.

As I mentioned above, the configuration items can be accessed with a dictionary syntax from app.config. Here you can see a quick session with the Python interpreter where I check what is the value of the secret key:

>>> from microblog import app

>>> app.config['SECRET_KEY']

'you-will-never-guess'

User Login Form

The Flask-WTF extension uses Python classes to represent web forms. A form class simply defines the fields of the form as class variables.

Once again having separation of concerns in mind, I’m going to use a new app/forms.py module to store my web form classes. To begin, let’s define a user login form, which asks the user to enter a username and a password. The form will also include a “remember me” check box, and a submit button:

app/forms.py: Login form

from flask_wtf import FlaskForm

from wtforms import StringField, PasswordField, BooleanField, SubmitField

from wtforms.validators import DataRequired

class LoginForm(FlaskForm):
    username = StringField('Username', validators=[DataRequired()])
    password = PasswordField('Password', validators=[DataRequired()])
    remember_me = BooleanField('Remember Me')
    submit = SubmitField('Sign In')

Most Flask extensions use a flask_<name> naming convention for their top-level import symbol. In this case, Flask-WTF has all its symbols under flask_wtf. This is where the FlaskForm base class is imported from at the top of app/forms.py.

The four classes that represent the field types that I’m using for this form are imported directly from the WTForms package, since the Flask-WTF extension does not provide customized versions. For each field, an object is created as a class variable in the LoginForm class. Each field is given a description or label as a first argument.

The optional validators argument that you see in some of the fields is used to attach validation behaviors to fields. The DataRequired validator simply checks that the field is not submitted empty. There are many more validators available, some of which will be used in other forms.

Form Templates

The next step is to add the form to an HTML template so that it can be rendered on a web page. The good news is that the fields that are defined in the LoginForm class know how to render themselves as HTML, so this task is fairly simple. Below you can see the login template, which I’m going to store in file app/templates/login.html:

app/templates/login.html: Login form template

{% extends "base.html" %}

{% block content %}

<h1>Sign In</h1>

<form action="" method="post" novalidate>

{{ form.hidden_tag() }}

<p>

{{ form.username.label }}<br>

{{ form.username(size=32) }}

</p>

<p>

{{ form.password.label }}<br>

{{ form.password(size=32) }}

</p>

<p>{{ form.remember_me() }} {{ form.remember_me.label }}</p>

<p>{{ form.submit() }}</p>

</form>

{% endblock %}

For this template I’m reusing one more time the base.html template as shown in Chapter 2, through the extends template inheritance statement. I will actually do this with all the templates, to ensure a consistent layout that includes a top navigation bar across all the pages of the application.

This template expects a form object instantiated from the LoginForm class to be given as an argument, which you can see referenced as form. This argument will be sent by the login view function, which I still haven’t written.

The HTML <form> element is used as a container for the web form. The action attribute of the form is used to tell the browser the URL that should be used when submitting the information the user entered in the form. When the action is set to an empty string the form is submitted to the URL that is currently in the address bar, which is the URL that rendered the form on the page. The method attribute specifies the HTTP request method that should be used when submitting the form to the server. The default is to send it with a GET request, but in almost all cases, using a POST request makes for a better user experience because requests of this type can submit the form data in the body of the request, while GET requests add the form fields to the URL, cluttering the browser address bar. The novalidate attribute is used to tell the web browser to not apply validation to the fields in this form, which effectively leaves this task to the Flask application running in the server. Using novalidate is entirely optional, but for this first form it is important that you set it because this will allow you to test server-side validation later in this chapter.

The form.hidden_tag() template argument generates a hidden field that includes a token that is used to protect the form against CSRF attacks. All you need to do to have the form protected is include this hidden field and have the SECRET_KEY variable defined in the Flask configuration. If you take care of these two things, Flask-WTF does the rest for you.

If you’ve written HTML web forms in the past, you may have found it odd that there are no HTML fields in this template. This is because the fields from the form object know how to render themselves as HTML. All I needed to do was to include {{ form.<field_name>.label }} where I wanted the field label, and {{ form.<field_name>() }} where I wanted the field. For fields that require additional HTML attributes, those can be passed as arguments. The username and password fields in this template take the size as an argument that will be added to the <input> HTML element as an attribute. This is how you can also attach CSS classes or IDs to form fields.

Form Views

The final step before you can see this form in the browser is to code a new view function in the application that renders the template from the previous section.

So let’s write a new view function mapped to the /login URL that creates a form, and passes it to the template for rendering. This view function can also go in the app/routes.py module with the previous one:

app/routes.py: Login view function

from flask import render_template

from app import app

from app.forms import LoginForm

# ...

@app.route('/login')
def login():
    form = LoginForm()
    return render_template('login.html', title='Sign In', form=form)

What I did here is import the LoginForm class from forms.py, instantiate an object from it, and send it down to the template. The form=form syntax may look odd, but it is simply passing the form object created in the line above (and shown on the right side) to the template with the name form (shown on the left). This is all that is required to get form fields rendered.

To make it easy to access the login form, the base template can include a link to it in the navigation bar:

app/templates/base.html: Login link in navigation bar

<div>

Microblog:

<a href="/index">Home</a>

<a href="/login">Login</a>

</div>

At this point you can run the application and see the form in your web browser. With the application running, type http://localhost:5000/ in the browser’s address bar, and then click on the “Login” link in the top navigation bar to see the new login form. Pretty cool, right?

Receiving Form Data

If you try to press the submit button the browser is going to display a “Method Not Allowed” error. This is because the login view function from the previous section does one half of the job so far. It can display the form on a web page, but it has no logic to process data submitted by the user yet. This is another area where Flask-WTF makes the job really easy. Here is an updated version of the view function that accepts and validates the data submitted by the user:

app/routes.py: Receiving login credentials

from flask import render_template, flash, redirect

@app.route('/login', methods=['GET', 'POST'])
def login():
    form = LoginForm()
    if form.validate_on_submit():
        flash('Login requested for user {}, remember_me={}'.format(
            form.username.data, form.remember_me.data))
        return redirect('/index')
    return render_template('login.html', title='Sign In', form=form)

The first new thing in this version is the methods argument in the route decorator. This tells Flask that this view function accepts GET and POST requests, overriding the default, which is to accept only GET requests. The HTTP protocol states that GET requests are those that return information to the client (the web browser in this case). All the requests in the application so far are of this type. POST requests are typically used when the browser submits form data to the server (in reality GET requests can also be used for this purpose, but it is not a recommended practice). The “Method Not Allowed” error that the browser showed you before appears because the browser tried to send a POST request and the application was not configured to accept it. By providing the methods argument, you are telling Flask which request methods should be accepted.

The form.validate_on_submit() method does all the form processing work. When the browser sends the GET request to receive the web page with the form, this method is going to return False, so in that case the function skips the if statement and goes directly to render the template in the last line of the function.

When the browser sends the POST request as a result of the user pressing the submit button, form.validate_on_submit() is going to gather all the data, run all the validators attached to fields, and if everything is all right it will return True, indicating that the data is valid and can be processed by the application. But if at least one field fails validation, then the function will return False, and that will cause the form to be rendered back to the user, like in the GET request case. Later I’m going to add an error message when validation fails.

When form.validate_on_submit() returns True, the login view function calls two new functions, imported from Flask. The flash() function is a useful way to show a message to the user. A lot of applications use this technique to let the user know if some action has been successful or not. In this case, I’m going to use this mechanism as a temporary solution, because I don’t have all the infrastructure necessary to log users in for real yet. The best I can do for now is show a message that confirms that the application received the credentials.

The second new function used in the login view function is redirect(). This function instructs the client web browser to automatically navigate to a different page, given as an argument. This view function uses it to redirect the user to the index page of the application.

When you call the flash() function, Flask stores the message, but flashed messages will not magically appear in web pages. The templates of the application need to render these flashed messages in a way that works for the site layout. I’m going to add these messages to the base template, so that all the templates inherit this functionality. This is the updated base template:

app/templates/base.html: Flashed messages in base template

<html>

<head>

{% if title %}

<title>{{ title }} - microblog</title>

{% else %}

<title>microblog</title>

{% endif %}

</head>

<body>

<div>

Microblog:

<a href="/index">Home</a>

<a href="/login">Login</a>

</div>

<hr>

{% with messages = get_flashed_messages() %}

{% if messages %}

<ul>

{% for message in messages %}

<li>{{ message }}</li>

{% endfor %}

</ul>

{% endif %}

{% endwith %}

{% block content %}{% endblock %}

</body>

</html>

Here I’m using a with construct to assign the result of calling get_flashed_messages() to a messages variable, all in the context of the template. The get_flashed_messages() function comes from Flask, and returns a list of all the messages that have been registered with flash() previously. The conditional that follows checks if messages has some content, and in that case, a <ul> element is rendered with each message as a <li> list item. This style of rendering does not look great, but the topic of styling the web application will come later.

An interesting property of these flashed messages is that once they are requested through the get_flashed_messages() function they are removed from the message list, so they appear only once after the flash() function is called.
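The "appear only once" behavior can be observed with Flask's test client. The following is a minimal sketch in a throwaway application, separate from microblog. The /flash and /show routes and the demo_app name are made up for this example, and the second route returns the messages as plain text instead of rendering a template.

```python
from flask import Flask, flash, get_flashed_messages

# Throwaway application, used only to demonstrate flashed messages.
demo_app = Flask(__name__)
demo_app.config['SECRET_KEY'] = 'dev-only-key'  # needed for the user session


@demo_app.route('/flash')
def do_flash():
    flash('Login requested for user susan')
    return 'flashed'


@demo_app.route('/show')
def show():
    # Reading the messages also removes them from the session.
    return ', '.join(get_flashed_messages())


with demo_app.test_client() as client:
    client.get('/flash')
    first = client.get('/show').get_data(as_text=True)
    second = client.get('/show').get_data(as_text=True)

print(repr(first), repr(second))
```

The first request to /show returns the message, and the second one returns an empty string, because the message list was emptied when it was read.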

This is a great time to try the application one more time and test how the form works. Make sure you try submitting the form with the username or password fields empty, to see how the DataRequired validator halts the submission process.

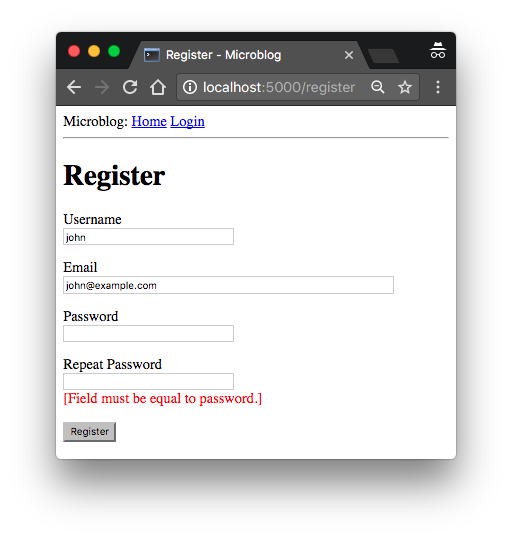

Improving Field Validation

The validators that are attached to form fields prevent invalid data from being accepted into the application. The way the application deals with invalid form input is by re-displaying the form, to let the user make the necessary corrections.

If you tried to submit invalid data, I’m sure you noticed that while the validation mechanisms work well, there is no indication given to the user that something is wrong with the form; the user simply gets the form back. The next task is to improve the user experience by adding a meaningful error message next to each field that failed validation.

In fact, the form validators generate these descriptive error messages already, so all that is missing is some additional logic in the template to render them.

Here is the login template with added field validation messages in the username and password fields:

app/templates/login.html: Validation errors in login form template

{% extends "base.html" %}

{% block content %}

<h1>Sign In</h1>

<form action="" method="post" novalidate>

{{ form.hidden_tag() }}

<p>

{{ form.username.label }}<br>

{{ form.username(size=32) }}<br>

{% for error in form.username.errors %}

<span style="color: red;">[{{ error }}]</span>

{% endfor %}

</p>

<p>

{{ form.password.label }}<br>

{{ form.password(size=32) }}<br>

{% for error in form.password.errors %}

<span style="color: red;">[{{ error }}]</span>

{% endfor %}

</p>

<p>{{ form.remember_me() }} {{ form.remember_me.label }}</p>

<p>{{ form.submit() }}</p>

</form>

{% endblock %}

The only change I’ve made is to add for loops right after the username and password fields that render the error messages added by the validators in red color. As a general rule, any fields that have validators attached will have error messages added under form.<field_name>.errors. This is going to be a list, because fields can have multiple validators attached and more than one may be providing error messages to display to the user.

If you try to submit the form with an empty username or password, you will now get a nice error message in red.

Generating Links

The login form is fairly complete now, but before closing this chapter I wanted to discuss the proper way to include links in templates and redirects. So far you have seen a few instances in which links are defined. For example, this is the current navigation bar in the base template:

<div>

Microblog:

<a href="/index">Home</a>

<a href="/login">Login</a>

</div>

The login view function also defines a link that is passed to the redirect() function:

@app.route('/login', methods=['GET', 'POST'])
def login():
    form = LoginForm()
    if form.validate_on_submit():
        # ...
        return redirect('/index')
    # ...

One problem with writing links directly in templates and source files is that if one day you decide to reorganize your links, then you are going to have to search and replace these links in your entire application.

To have better control over these links, Flask provides a function called url_for(), which generates URLs using its internal mapping of URLs to view functions. For example, url_for('login') returns /login, and url_for('index') returns /index. The argument to url_for() is the endpoint name, which is the name of the view function.

You may ask why it is better to use the function names instead of URLs. The fact is that URLs are much more likely to change than view function names, which are completely internal. A secondary reason is that, as you will learn later, some URLs have dynamic components in them, so generating those URLs by hand would require concatenating multiple elements, which is tedious and error prone. The url_for() function is also able to generate these complex URLs.
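Here is a small self-contained sketch of url_for() at work. It runs in a throwaway application, not in microblog, and the /user/<username> route is a hypothetical example of a URL with a dynamic component. Outside of a real request, url_for() needs a request context, which test_request_context() provides.

```python
from flask import Flask, url_for

# Throwaway application, used only to demonstrate url_for().
demo_app = Flask(__name__)


@demo_app.route('/index')
def index():
    return 'index page'


@demo_app.route('/login')
def login():
    return 'login page'


# Hypothetical route with a dynamic component in the URL.
@demo_app.route('/user/<username>')
def user(username):
    return 'profile of ' + username


with demo_app.test_request_context():
    index_url = url_for('index')
    login_url = url_for('login')
    # Dynamic components are passed as keyword arguments.
    user_url = url_for('user', username='susan')

print(index_url, login_url, user_url)
```

If the URL of a route is changed in its decorator, every url_for() call that names that view function picks up the new URL without further edits.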

So from now on, I’m going to use url_for() every time I need to generate an application URL. The navigation bar in the base template then becomes:

app/templates/base.html: Use url_for() function for links

<div>

Microblog:

<a href="{{ url_for('index') }}">Home</a>

<a href="{{ url_for('login') }}">Login</a>

</div>

And here is the updated login() view function:

app/routes.py: Use url_for() function for links

from flask import render_template, flash, redirect, url_for

# ...

@app.route('/login', methods=['GET', 'POST'])
def login():
    form = LoginForm()
    if form.validate_on_submit():
        # ...
        return redirect(url_for('index'))
    # ...

The Flask Mega-Tutorial Part IV: Database

The topic of this chapter is extremely important. For most applications, there is going to be a need to maintain persistent data that can be retrieved efficiently, and this is exactly what databases are made for.

The GitHub links for this chapter are: Browse, Zip, Diff.

Databases in Flask

As I’m sure you have heard already, Flask does not support databases natively. This is one of the many areas in which Flask is intentionally not opinionated, which is great, because you have the freedom to choose the database that best fits your application instead of being forced to adapt to one.

There are great choices for databases in Python, many of them with Flask extensions that make a better integration with the application. The databases can be separated into two big groups, those that follow the relational model, and those that do not. The latter group is often called NoSQL, indicating that they do not implement the popular relational query language SQL. While there are great database products in both groups, my opinion is that relational databases are a better match for applications that have structured data such as lists of users, blog posts, etc., while NoSQL databases tend to be better for data that has a less defined structure. This application, like most others, can be implemented using either type of database, but for the reasons stated above, I’m going to go with a relational database.

In Chapter 3 I showed you a first Flask extension. In this chapter I’m going to use two more. The first is Flask-SQLAlchemy, an extension that provides a Flask-friendly wrapper to the popular SQLAlchemy package, which is an Object Relational Mapper or ORM. ORMs allow applications to manage a database using high-level entities such as classes, objects and methods instead of tables and SQL. The job of the ORM is to translate the high-level operations into database commands.

The nice thing about SQLAlchemy is that it is an ORM not for one, but for many relational databases. SQLAlchemy supports a long list of database engines, including the popular MySQL, PostgreSQL and SQLite. This is extremely powerful, because you can do your development using a simple SQLite database that does not require a server, and then when the time comes to deploy the application on a production server you can choose a more robust MySQL or PostgreSQL server, without having to change your application.

To install Flask-SQLAlchemy in your virtual environment, make sure you have activated it first, and then run:

(venv) $ pip install flask-sqlalchemy

Database Migrations

Most database tutorials I’ve seen cover creation and use of a database, but do not adequately address the problem of making updates to an existing database as the application needs change or grow. This is hard because relational databases are centered around structured data, so when the structure changes the data that is already in the database needs to be migrated to the modified structure.

The second extension that I’m going to present in this chapter is Flask-Migrate, which is actually one created by yours truly. This extension is a Flask wrapper for Alembic, a database migration framework for SQLAlchemy. Working with database migrations adds a bit of work to get a database started, but that is a small price to pay for a robust way to make changes to your database in the future.

The installation process for Flask-Migrate is similar to other extensions you have seen:

(venv) $ pip install flask-migrate

Flask-SQLAlchemy Configuration

During development, I’m going to use a SQLite database. SQLite databases are the most convenient choice for developing small applications, sometimes even not so small ones, as each database is stored in a single file on disk and there is no need to run a database server like MySQL and PostgreSQL.

We have two new configuration items to add to the config file:

config.py: Flask-SQLAlchemy configuration

import os

basedir = os.path.abspath(os.path.dirname(__file__))

class Config(object):
    # ...
    SQLALCHEMY_DATABASE_URI = os.environ.get('DATABASE_URL') or \
        'sqlite:///' + os.path.join(basedir, 'app.db')
    SQLALCHEMY_TRACK_MODIFICATIONS = False

The Flask-SQLAlchemy extension takes the location of the application’s database from the SQLALCHEMY_DATABASE_URI configuration variable. As you recall from Chapter 3, it is in general a good practice to set configuration from environment variables, and provide a fallback value when the environment does not define the variable. In this case I’m taking the database URL from the DATABASE_URL environment variable, and if that isn’t defined, I’m configuring a database named app.db located in the main directory of the application, which is stored in the basedir variable.
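To show what values this expression can produce, the sketch below wraps it in a function that takes the environment and the base directory as arguments. The database_uri() helper, the /home/miguel/microblog directory and the PostgreSQL URL are assumptions made for this illustration, and the fallback path shown is what you would get on a Unix-like system.

```python
import os


def database_uri(environ, basedir):
    # Same expression as in config.py, with its inputs passed in explicitly.
    return environ.get('DATABASE_URL') or \
        'sqlite:///' + os.path.join(basedir, 'app.db')


# No DATABASE_URL defined: a SQLite file inside the project directory.
fallback = database_uri({}, '/home/miguel/microblog')

# DATABASE_URL defined: the value from the environment is used as is.
override = database_uri(
    {'DATABASE_URL': 'postgresql://user:password@localhost/microblog'},
    '/home/miguel/microblog')

print(fallback)
print(override)
```

With an absolute path the SQLite URL ends up with four slashes in a row: three that belong to the sqlite:/// prefix and one that starts the path.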

The SQLALCHEMY_TRACK_MODIFICATIONS configuration option is set to False to disable a feature of Flask-SQLAlchemy that I do not need, which is to signal the application every time a change is about to be made in the database.

The database is going to be represented in the application by the database instance. The database migration engine will also have an instance. These are objects that need to be created after the application, in the app/__init__.py file:

app/__init__.py: Flask-SQLAlchemy and Flask-Migrate initialization

from flask import Flask

from config import Config

from flask_sqlalchemy import SQLAlchemy

from flask_migrate import Migrate

app = Flask(__name__)

app.config.from_object(Config)

db = SQLAlchemy(app)

migrate = Migrate(app, db)

from app import routes, models

I have made three changes to the init script. First, I have added a db object that represents the database. Then I have added another object that represents the migration engine. Hopefully you see a pattern in how to work with Flask extensions. Most extensions are initialized as these two. Finally, I’m importing a new module called models at the bottom. This module will define the structure of the database.

Database Models

The data that will be stored in the database will be represented by a collection of classes, usually called database models. The ORM layer within SQLAlchemy will do the translations required to map objects created from these classes into rows in the proper database tables.

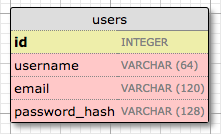

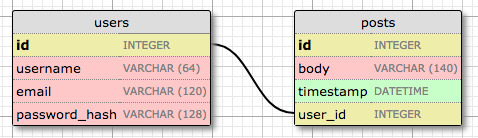

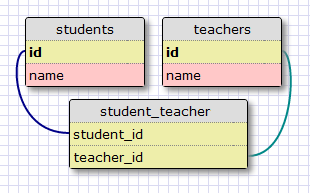

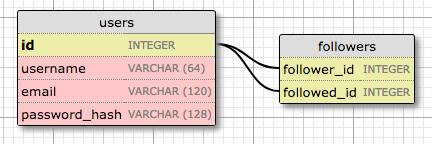

Let’s start by creating a model that represents users. Using the WWW SQL Designer tool, I have made the following diagram to represent the data that we want to use in the users table:

The id field is usually in all models, and is used as the primary key. Each user in the database will be assigned a unique id value, stored in this field. Primary keys are, in most cases, automatically assigned by the database, so I just need to provide the id field marked as a primary key.

The username, email and password_hash fields are defined as strings (or VARCHAR in database jargon), and their maximum lengths are specified so that the database can optimize space usage. While the username and email fields are self-explanatory, the password_hash field deserves some attention. I want to make sure the application that I’m building adopts security best practices, and for that reason I will not be storing user passwords in the database. The problem with storing passwords is that if the database ever becomes compromised, the attackers will have access to the passwords, and that could be devastating for users. Instead of writing the passwords directly, I’m going to write password hashes, which greatly improve security. This is going to be the topic of another chapter, so don’t worry about it too much for now.

So now that I know what I want for my users table, I can translate that into code in the new app/models.py module:

app/models.py: User database model

from app import db

class User(db.Model):
    id = db.Column(db.Integer, primary_key=True)
    username = db.Column(db.String(64), index=True, unique=True)
    email = db.Column(db.String(120), index=True, unique=True)
    password_hash = db.Column(db.String(128))

    def __repr__(self):
        return '<User {}>'.format(self.username)

The User class created above inherits from db.Model, a base class for all models from Flask-SQLAlchemy. This class defines several fields as class variables. Fields are created as instances of the db.Column class, which takes the field type as an argument, plus other optional arguments that, for example, allow me to indicate which fields are unique and indexed, which is important so that database searches are efficient.

The __repr__ method tells Python how to print objects of this class, which is going to be useful for debugging. You can see the __repr__() method in action in the Python interpreter session below:

>>> from app.models import User

>>> u = User(username='susan', email='susan@example.com')

>>> u

<User susan>

Creating The Migration Repository

The model class created in the previous section defines the initial database structure (or schema) for this application. But as the application continues to grow, there is going to be a need to change that structure, very likely to add new things, but sometimes also to modify or remove items. Alembic (the migration framework used by Flask-Migrate) will make these schema changes in a way that does not require the database to be recreated from scratch.

To accomplish this seemingly difficult task, Alembic maintains a migration repository, which is a directory in which it stores its migration scripts. Each time a change is made to the database schema, a migration script is added to the repository with the details of the change. To apply the migrations to a database, these migration scripts are executed in the sequence they were created.
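The idea of an ordered list of migration scripts can be illustrated with a hand-rolled sketch based on the standard library's sqlite3 module. This is not how Alembic is implemented. The MIGRATIONS list, the applied bookkeeping table and the upgrade() function are all inventions for this example, meant only to show why applying changes in sequence lets an existing database catch up without being recreated.

```python
import sqlite3

# Hand-rolled illustration only; Alembic's scripts and bookkeeping differ.
MIGRATIONS = [
    ('0001_users_table',
     'CREATE TABLE user (id INTEGER PRIMARY KEY, username VARCHAR(64))'),
    ('0002_add_email',
     'ALTER TABLE user ADD COLUMN email VARCHAR(120)'),
]


def upgrade(conn):
    """Apply, in order, every migration not yet recorded as applied."""
    conn.execute('CREATE TABLE IF NOT EXISTS applied (name TEXT PRIMARY KEY)')
    done = {row[0] for row in conn.execute('SELECT name FROM applied')}
    ran = []
    for name, sql in MIGRATIONS:
        if name not in done:
            conn.execute(sql)
            conn.execute('INSERT INTO applied (name) VALUES (?)', (name,))
            ran.append(name)
    conn.commit()
    return ran


conn = sqlite3.connect(':memory:')
first_run = upgrade(conn)    # a new database receives both migrations
second_run = upgrade(conn)   # an up-to-date database receives none
columns = [row[1] for row in conn.execute('PRAGMA table_info(user)')]

print(first_run, second_run, columns)
```

A database that already has the first migration applied would only run the second one, which is what makes it possible to evolve a database that is in use.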

Flask-Migrate exposes its commands through the flask command. You have already seen flask run, which is a sub-command that is native to Flask. The flask db sub-command is added by Flask-Migrate to manage everything related to database migrations. So let’s create the migration repository for microblog by running flask db init:

(venv) $ flask db init

Creating directory /home/miguel/microblog/migrations ... done

Creating directory /home/miguel/microblog/migrations/versions ... done

Generating /home/miguel/microblog/migrations/alembic.ini ... done

Generating /home/miguel/microblog/migrations/env.py ... done

Generating /home/miguel/microblog/migrations/README ... done

Generating /home/miguel/microblog/migrations/script.py.mako ... done

Please edit configuration/connection/logging settings in

'/home/miguel/microblog/migrations/alembic.ini' before proceeding.

Remember that the flask command relies on the FLASK_APP environment variable to know where the Flask application lives. For this application, you want to set FLASK_APP=microblog.py, as discussed in Chapter 1.

After you run this command, you will find a new migrations directory, with a few files and a versions sub-directory inside. All these files should be treated as part of your project from now on, and in particular, should be added to source control.

The First Database Migration

With the migration repository in place, it is time to create the first database migration, which will include the users table that maps to the User database model. There are two ways to create a database migration: manually or automatically. To generate a migration automatically, Alembic compares the database schema as defined by the database models, against the actual database schema currently used in the database. It then populates the migration script with the changes necessary to make the database schema match the application models. In this case, since there is no previous database, the automatic migration will add the entire User model to the migration script. The flask db migrate sub-command generates these automatic migrations:

(venv) $ flask db migrate -m "users table"

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.autogenerate.compare] Detected added table 'user'

INFO [alembic.autogenerate.compare] Detected added index 'ix_user_email' on '['email']'

INFO [alembic.autogenerate.compare] Detected added index 'ix_user_username' on '['username']'

Generating /home/miguel/microblog/migrations/versions/e517276bb1c2_users_table.py ... done

The output of the command gives you an idea of what Alembic included in the migration. The first two lines are informational and can usually be ignored. It then says that it found a user table and two indexes. Then it tells you where it wrote the migration script. The e517276bb1c2 code is an automatically generated unique code for the migration (it will be different for you). The comment given with the -m option is optional; it adds a short descriptive text to the migration.

The generated migration script is now part of your project, and needs to be added to source control. You are welcome to inspect the script if you are curious to see how it looks. You will find that it has two functions called upgrade() and downgrade(). The upgrade() function applies the migration, and the downgrade() function removes it. This allows Alembic to migrate the database to any point in the history, even to older versions, by using the downgrade path.
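If you would like to see the upgrade() and downgrade() idea in isolation, here is a minimal sketch that uses only Python's built-in sqlite3 module. This is not the script that Alembic generates, which is written against Alembic's own operations and will look different. The table layout is taken from the User model, and the table_names() helper is a made-up function I'm using just to inspect the result.

```python
import sqlite3


def upgrade(conn):
    # Apply the migration: create the table and its two indexes.
    conn.execute(
        "CREATE TABLE user ("
        "id INTEGER PRIMARY KEY, "
        "username VARCHAR(64), "
        "email VARCHAR(120), "
        "password_hash VARCHAR(128))"
    )
    conn.execute("CREATE UNIQUE INDEX ix_user_username ON user (username)")
    conn.execute("CREATE UNIQUE INDEX ix_user_email ON user (email)")


def downgrade(conn):
    # Undo the migration: in SQLite, dropping a table also drops its indexes.
    conn.execute("DROP TABLE user")


def table_names(conn):
    # Hypothetical helper: list the tables currently in the database.
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    )
    return [name for (name,) in rows]


conn = sqlite3.connect(":memory:")  # throwaway in-memory database
upgrade(conn)
after_upgrade = table_names(conn)
downgrade(conn)
after_downgrade = table_names(conn)
print(after_upgrade, after_downgrade)  # ['user'] []
```

The point to take away is that each migration knows both how to move the schema forward and how to put it back the way it was.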

The flask db migrate command does not make any changes to the database; it only generates the migration script. To apply the changes to the database, the flask db upgrade command must be used.

(venv) $ flask db upgrade

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade -> e517276bb1c2, users table

Because this application uses SQLite, the upgrade command will detect that a database does not exist and will create it. After the command finishes you will notice a new file named app.db, which is the SQLite database. When working with database servers such as MySQL and PostgreSQL, you have to create the database in the database server before running upgrade.

Note that Flask-SQLAlchemy uses a “snake case” naming convention for database tables by default. For the User model above, the corresponding table in the database will be named user. For an AddressAndPhone model class, the table would be named address_and_phone. If you prefer to choose your own table names, you can add an attribute named __tablename__ to the model class, set to the desired name as a string.
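To get a feel for the naming convention, here is a small sketch of a camel case to snake case conversion. The camel_to_snake() function is my own approximation written for this example, not the code that Flask-SQLAlchemy runs, and it does not try to handle special cases such as runs of capital letters.

```python
import re


def camel_to_snake(name):
    # Insert an underscore before every uppercase letter that is not the
    # first character of the name, then lowercase the result.
    return re.sub(r"(?<!^)(?=[A-Z])", "_", name).lower()


print(camel_to_snake("User"))             # user
print(camel_to_snake("AddressAndPhone"))  # address_and_phone
```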

Database Upgrade and Downgrade Workflow

The application is in its infancy at this point, but it does not hurt to discuss what is going to be the database migration strategy going forward. Imagine that you have your application on your development machine, and also have a copy deployed to a production server that is online and in use.

Let’s say that for the next release of your app you have to introduce a change to your models, for example a new table needs to be added. Without migrations you would need to figure out how to change the schema of your database, first on your development machine and then again on your server, and this could be a lot of work.

But with database migration support, after you modify the models in your application you generate a new migration script (flask db migrate), you probably review it to make sure the automatic generation did the right thing, and then apply the changes to your development database (flask db upgrade). You will add the migration script to source control and commit it.

When you are ready to release the new version of the application to your production server, all you need to do is grab the updated version of your application, which will include the new migration script, and run flask db upgrade. Alembic will detect that the production database is not updated to the latest revision of the schema, and run all the new migration scripts that were created after the previous release.
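The "run only what is new" behavior is easier to picture with a toy example. The sketch below uses the standard library's sqlite3 module and is only an illustration of the idea, not how Alembic is implemented. The revision names, the MIGRATIONS list and the schema_version table are all invented for this example (Alembic keeps its own record of the current revision in the database).

```python
import sqlite3

# Hypothetical migration history, oldest first: (revision id, SQL to apply).
MIGRATIONS = [
    ("0001_users_table",
     "CREATE TABLE user (id INTEGER PRIMARY KEY, username VARCHAR(64))"),
    ("0002_example_table",
     "CREATE TABLE example (id INTEGER PRIMARY KEY, note VARCHAR(140))"),
]


def current_revision(conn):
    # The database remembers which revision it is at in a one-row table.
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (revision TEXT)")
    row = conn.execute("SELECT revision FROM schema_version").fetchone()
    return row[0] if row else None


def upgrade(conn):
    # Apply every migration that is newer than the stored revision.
    revisions = [revision for revision, _ in MIGRATIONS]
    current = current_revision(conn)
    start = revisions.index(current) + 1 if current else 0
    applied = []
    for revision, sql in MIGRATIONS[start:]:
        conn.execute(sql)
        conn.execute("DELETE FROM schema_version")
        conn.execute("INSERT INTO schema_version VALUES (?)", (revision,))
        applied.append(revision)
    conn.commit()
    return applied


conn = sqlite3.connect(":memory:")
first_run = upgrade(conn)   # fresh database: both migrations are applied
second_run = upgrade(conn)  # already up to date: nothing left to apply
print(first_run)
print(second_run)
```

Because the database itself records the revision it is at, the same upgrade command is safe to run on a development database, on a brand new one, or on a production database that is several releases behind.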

As I mentioned earlier, you also have a flask db downgrade command, which undoes the last migration. While you will be unlikely to need this option on a production system, you may find it very useful during development. You may have generated a migration script and applied it, only to find that the changes that you made are not exactly what you need. In this case, you can downgrade the database, delete the migration script, and then generate a new one to replace it.

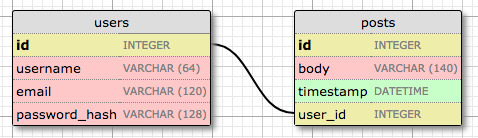

Database Relationships

Relational databases are good at storing relations between data items. Consider the case of a user writing a blog post. The user will have a record in the users table, and the post will have a record in the posts table. The most efficient way to record who wrote a given post is to link the two related records.

Once a link between a user and a post is established, the database can answer queries about this link. The most trivial one is when you have a blog post and need to know what user wrote it. A more complex query is the reverse of this one. If you have a user, you may want to know all the posts that this user wrote. Flask-SQLAlchemy will help with both types of queries.

Let’s expand the database to store blog posts to see relationships in action. Here is the schema for a new posts table:

The posts table will have the required id, the body of the post and a timestamp. But in addition to these expected fields, I’m adding a user_id field, which links the post to its author. You’ve seen that all users have an id primary key, which is unique. The way to link a blog post to the user that authored it is to add a reference to the user’s id, and that is exactly what the user_id field is. This user_id field is called a foreign key. The database diagram above shows foreign keys as a link between the field and the id field of the table it refers to. This kind of relationship is called a one-to-many, because “one” user writes “many” posts.
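If you are curious about what a foreign key looks like at the database level, here is a rough sketch in plain SQL, run through Python's sqlite3 module. The exact SQL that SQLAlchemy emits will differ, and the sample rows are made up for illustration. It shows both directions of the one-to-many relationship, and what happens when a post points at a user that does not exist.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this setting is turned on.
conn.execute("PRAGMA foreign_keys = ON")
conn.execute("CREATE TABLE user (id INTEGER PRIMARY KEY, username VARCHAR(64))")
conn.execute(
    "CREATE TABLE post ("
    "id INTEGER PRIMARY KEY, "
    "body VARCHAR(140), "
    "timestamp DATETIME, "
    "user_id INTEGER REFERENCES user (id))"
)

conn.execute("INSERT INTO user (id, username) VALUES (1, 'john')")
conn.execute("INSERT INTO user (id, username) VALUES (2, 'susan')")
conn.execute("INSERT INTO post (body, user_id) VALUES ('first post', 1)")
conn.execute("INSERT INTO post (body, user_id) VALUES ('second post', 1)")

# From the "many" side to the "one" side: who wrote post 1?
author = conn.execute(
    "SELECT user.username FROM post JOIN user ON user.id = post.user_id "
    "WHERE post.id = 1"
).fetchone()[0]

# From the "one" side to the "many" side: which posts did user 1 write?
bodies = [
    body
    for (body,) in conn.execute(
        "SELECT body FROM post WHERE user_id = 1 ORDER BY id"
    )
]

# A post that references a user id that does not exist is rejected.
try:
    conn.execute("INSERT INTO post (body, user_id) VALUES ('orphan', 99)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

print(author, bodies, rejected)
```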

The modified app/models.py is shown below:

app/models.py: Posts database table and relationship

from datetime import datetime

from app import db

class User(db.Model):

    id = db.Column(db.Integer, primary_key=True)

    username = db.Column(db.String(64), index=True, unique=True)

    email = db.Column(db.String(120), index=True, unique=True)

    password_hash = db.Column(db.String(128))

    posts = db.relationship('Post', backref='author', lazy='dynamic')

    def __repr__(self):

        return '<User {}>'.format(self.username)

class Post(db.Model):

    id = db.Column(db.Integer, primary_key=True)

    body = db.Column(db.String(140))

    timestamp = db.Column(db.DateTime, index=True, default=datetime.utcnow)

    user_id = db.Column(db.Integer, db.ForeignKey('user.id'))

    def __repr__(self):

        return '<Post {}>'.format(self.body)

The new Post class will represent blog posts written by users. The timestamp field is going to be indexed, which is useful if you want to retrieve posts in chronological order. I have also added a default argument, and passed the datetime.utcnow function. When you pass a function as a default, SQLAlchemy will set the field to the value of calling that function (note that I did not include the () after utcnow, so I’m passing the function itself, and not the result of calling it). In general, you will want to work with UTC dates and times in a server application. This ensures that you are using uniform timestamps regardless of where the users are located. These timestamps will be converted to the user’s local time when they are displayed.
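The difference between passing a function and passing the result of calling it is subtle, so here is a tiny sketch in plain Python. The resolve_default() and utc_now() helpers are hypothetical names I made up to mimic the behavior described above. They are not part of SQLAlchemy, and utc_now() uses datetime.now(timezone.utc) rather than the datetime.utcnow function used in the model.

```python
from datetime import datetime, timezone


def resolve_default(default):
    # Hypothetical helper: if the default is callable, call it when a row
    # is created; otherwise use the stored value as it is.
    return default() if callable(default) else default


def utc_now():
    # Hypothetical helper: the current time in UTC.
    return datetime.now(timezone.utc)


# Passing the function itself: it is called again for every new row.
as_function = utc_now
# Passing the result: the time is computed once, right here, and every
# row created afterwards would reuse that same frozen value.
as_value = utc_now()

row1 = resolve_default(as_function)
row2 = resolve_default(as_function)
fixed1 = resolve_default(as_value)
fixed2 = resolve_default(as_value)

print(row1, row2)        # two timestamps, each taken when its row was created
print(fixed1 is fixed2)  # True
```

If the model had used default=datetime.utcnow() with the parentheses, every post would get the time at which the module was imported, which is almost never what you want.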

The user_id field was initialized as a foreign key to user.id, which means that it references an id value from the users table. In this reference the user part is the name of the database table for the model. It is an unfortunate inconsistency that in some instances such as in a db.relationship() call, the model is referenced by the model class, which typically starts with an uppercase character, while in other cases such as this db.ForeignKey() declaration, a model is given by its database table name, for which SQLAlchemy automatically uses lowercase characters and, for multi-word model names, snake case.

The User class has a new posts field, which is initialized with db.relationship. This is not an actual database field, but a high-level view of the relationship between users and posts, and for that reason it isn’t in the database diagram. For a one-to-many relationship, a db.relationship field is normally defined on the “one” side, and is used as a convenient way to get access to the “many”. So for example, if I have a user stored in u, the expression u.posts will run a database query that returns all the posts written by that user. The first argument to db.relationship is the model class that represents the “many” side of the relationship. This argument can be provided as a string with the class name if the model is defined later in the module. The backref argument defines the name of a field that will be added to the objects of the “many” class that points back at the “one” object. This will add a post.author expression that will return the user given a post. The lazy argument defines how the database query for the relationship will be issued, which is something that I will discuss later. Don’t worry if these details don’t make much sense just yet; I’ll show you examples of this at the end of this article.

Since I have updates to the application models, a new database migration needs to be generated:

(venv) $ flask db migrate -m "posts table"

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.autogenerate.compare] Detected added table 'post'

INFO [alembic.autogenerate.compare] Detected added index 'ix_post_timestamp' on '['timestamp']'

Generating /home/miguel/microblog/migrations/versions/780739b227a7_posts_table.py ... done

And the migration needs to be applied to the database:

(venv) $ flask db upgrade

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade e517276bb1c2 -> 780739b227a7, posts table

If you are storing your project in source control, also remember to add the new migration script to it.

Play Time

I have made you suffer through a long process to define the database, but I haven’t shown you how everything works yet. Since the application does not have any database logic yet, let’s play with the database in the Python interpreter to become familiar with it. So go ahead and fire up Python by running python. Make sure your virtual environment is activated before you start the interpreter.

Once in the Python prompt, let’s import the database instance and the models:

>>> from app import db

>>> from app.models import User, Post

Start by creating a new user:

>>> u = User(username='john', email='john@example.com')

>>> db.session.add(u)

>>> db.session.commit()

Changes to a database are done in the context of a session, which can be accessed as db.session. Multiple changes can be accumulated in a session and once all the changes have been registered you can issue a single db.session.commit(), which writes all the changes atomically. If at any time while working on a session there is an error, a call to db.session.rollback() will abort the session and remove any changes stored in it. The important thing to remember is that changes are only written to the database when db.session.commit() is called. Sessions guarantee that the database will never be left in an inconsistent state.
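The commit and rollback behavior is worth seeing in action. The sketch below uses a plain sqlite3 connection as a stand-in for db.session, so it is only an analogy: SQLAlchemy sessions do considerably more than this. The usernames() helper and the sample data are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE user (id INTEGER PRIMARY KEY, username VARCHAR(64) UNIQUE)"
)
conn.commit()


def usernames(conn):
    # Hypothetical helper: return all stored usernames in id order.
    rows = conn.execute("SELECT username FROM user ORDER BY id")
    return [name for (name,) in rows]


# Two changes are accumulated and then written together.
conn.execute("INSERT INTO user (username) VALUES ('john')")
conn.execute("INSERT INTO user (username) VALUES ('susan')")
conn.commit()

# A failed unit of work: the second insert violates the unique constraint,
# so the rollback discards the whole batch, including 'david'.
try:
    conn.execute("INSERT INTO user (username) VALUES ('david')")
    conn.execute("INSERT INTO user (username) VALUES ('john')")
    conn.commit()
except sqlite3.IntegrityError:
    conn.rollback()

final_users = usernames(conn)
print(final_users)  # ['john', 'susan']
```

Note how 'david' never makes it into the table even though that insert succeeded on its own. Nothing is permanent until the commit.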

Let’s add another user:

>>> u = User(username='susan', email='susan@example.com')

>>> db.session.add(u)

>>> db.session.commit()

The database can answer a query that returns all the users:

>>> users = User.query.all()

>>> users

[<User john>, <User susan>]

>>> for u in users:

...     print(u.id, u.username)

...

1 john

2 susan

All models have a query attribute that is the entry point to run database queries. The most basic query is the one that returns all elements of that class, which is appropriately named all(). Note that the id fields were automatically set to 1 and 2 when those users were added.

Here is another way to do queries. If you know the id of a user, you can retrieve that user as follows:

>>> u = User.query.get(1)

>>> u

<User john>

Now let’s add a blog post:

>>> u = User.query.get(1)

>>> p = Post(body='my first post!', author=u)

>>> db.session.add(p)

>>> db.session.commit()

I did not need to set a value for the timestamp field because that field has a default, which you can see in the model definition. And what about the user_id field? Recall that the db.relationship that I created in the User class adds a posts attribute to users, and also an author attribute to posts. I assign an author to a post using the author virtual field instead of having to deal with user IDs. SQLAlchemy is great in that respect, as it provides a high-level abstraction over relationships and foreign keys.

To complete this session, let’s look at a few more database queries:

>>> # get all posts written by a user

>>> u = User.query.get(1)

>>> u

<User john>

>>> posts = u.posts.all()

>>> posts

[<Post my first post!>]

>>> # same, but with a user that has no posts

>>> u = User.query.get(2)

>>> u

<User susan>

>>> u.posts.all()

[]

>>> # print post author and body for all posts

>>> posts = Post.query.all()

>>> for p in posts:

...     print(p.id, p.author.username, p.body)

...

1 john my first post!